documentation

Pivoting:

I was really interested in further exploring ML Agents within Unity after our crash course a couple of weeks ago. I wanted to keep playing around with it in hopes of training my very first ML Agent and keeping it as a backup Project C.

I started by cloning the Unity ML Agents Toolkit repo and installing Python through Miniconda, along with all the other necessary dependencies (all of which I could not have done without my instructor, John!). After this, I tested whether I could take an already trained agent and change simple behavioral setups, i.e., rewarding it for the opposite of what it used to do.

This led to some funny results, but I wanted to take it a step further and try to train my own agent. The idea was the following: take an existing .yaml file from the example projects and make a few modifications to it. I didn't really know at the time how I wanted my final agent to behave, but I think that was exactly what drew me to ML Agents in the first place.

Setting up the environment:

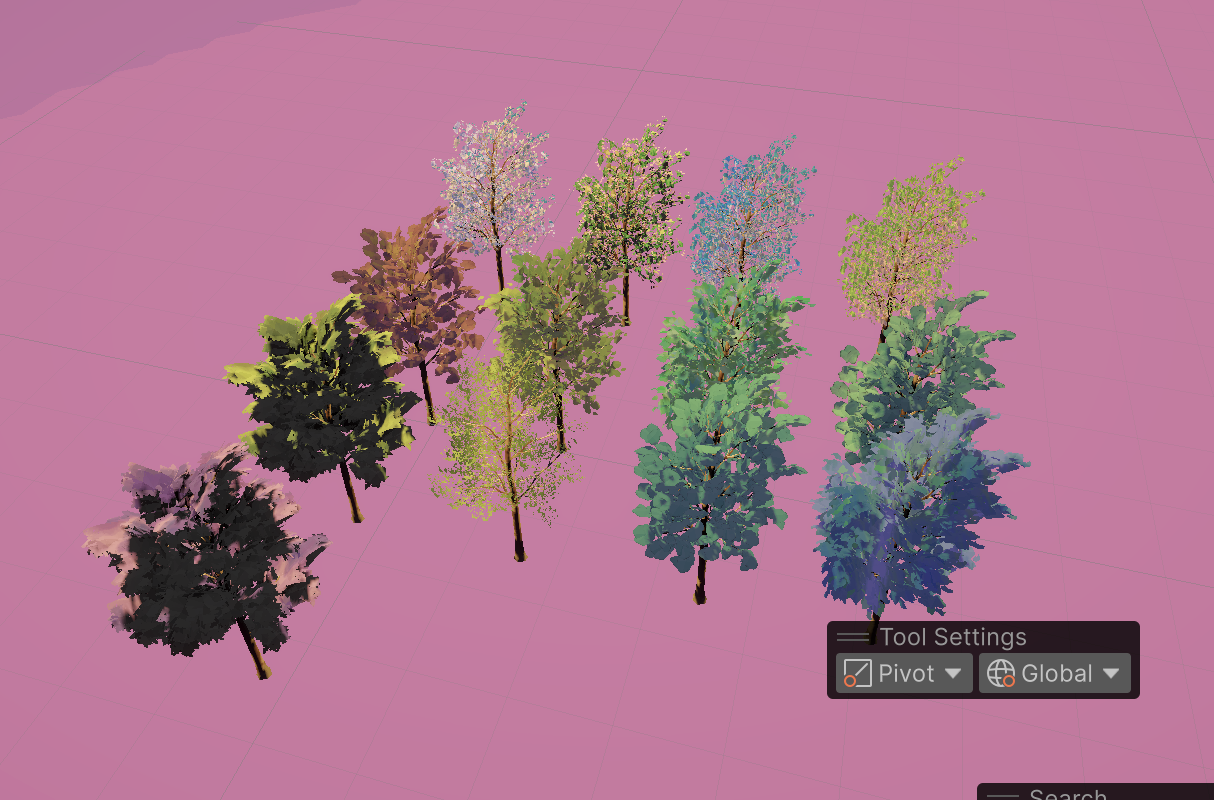

My agent had to live somewhere. In approaching this project, I went back to revisit Ian Cheng's series on artificial lifeforms; I found BOB's world so visually striking. For my environment, I immediately thought of forests. I think forests have been something of a go-to choice for me in our course this semester.

And so, after building a simple terrain, I added trees and other plant lifeforms, all with a watercolor-y shader. For the skybox, I reused some of the skyboxes from my midterm project, Enfut, because I thought their light purple hues would fit well in this new agent's forest.

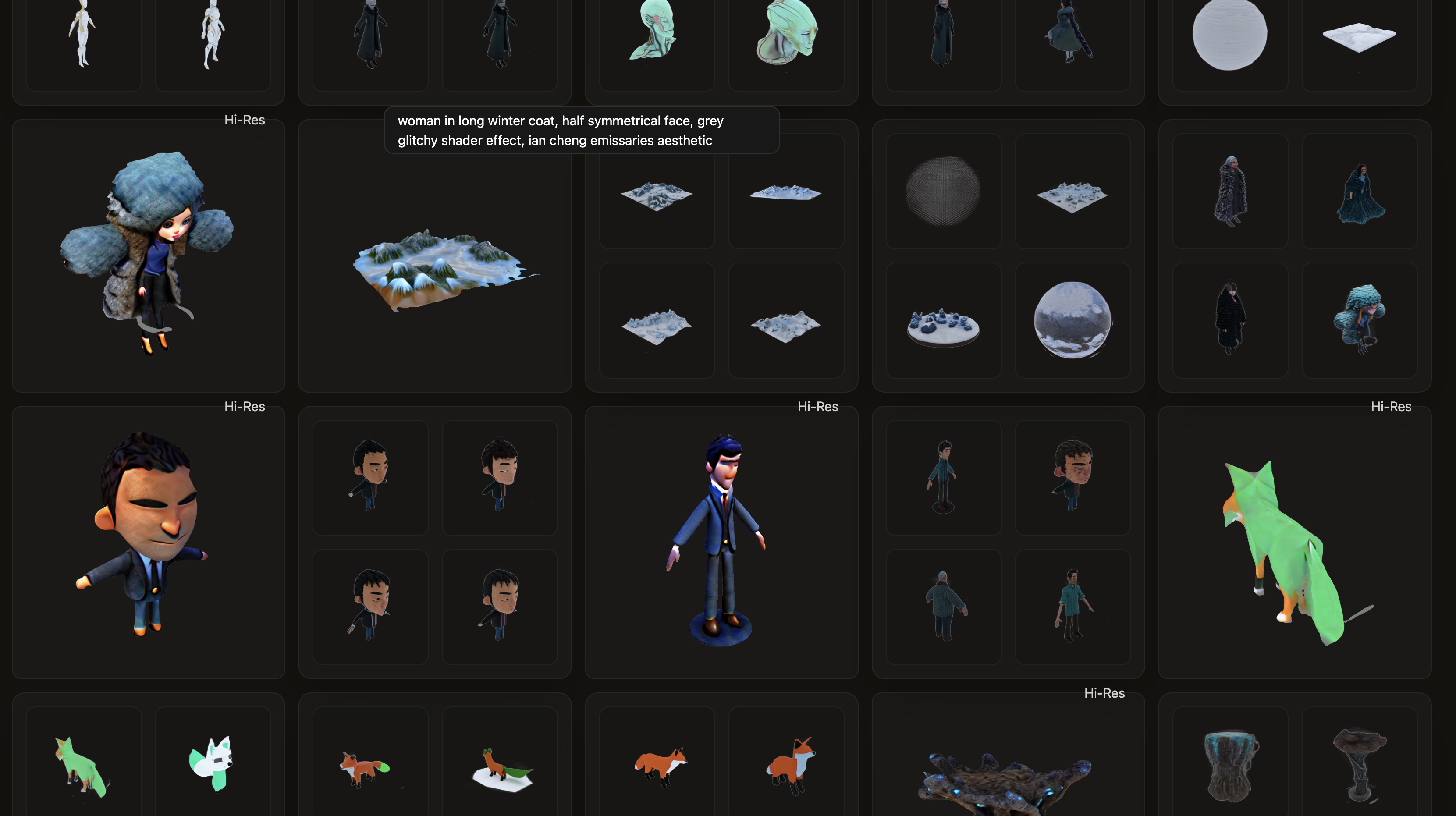

As for the agents, my first approach was an AI-generated character. My initial idea was to create an entirely synthetic environment, even including the terrain; however, I soon realized that trying to rig an AI-generated character model can be quite frustrating, and that is how I ended up back at the setup described above.

Configuration:

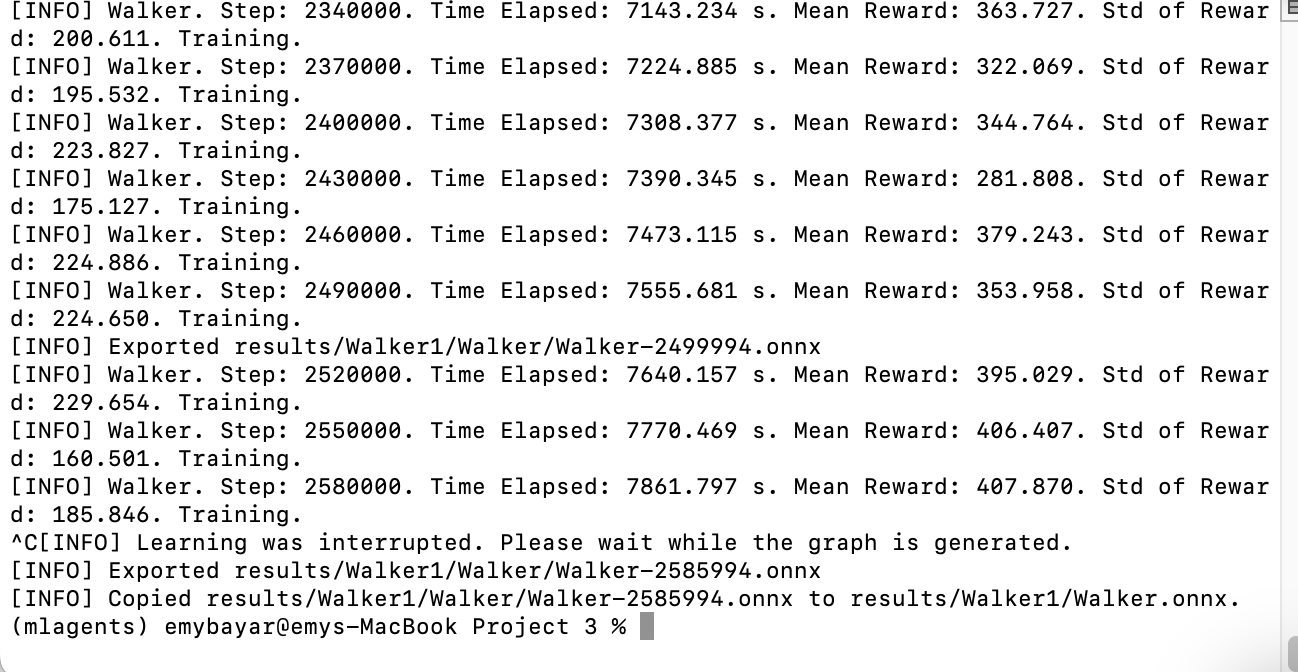

I was under the assumption that by importing the ml-agents folder into my project folder, I could immediately start training! After updating the ragdoll prefab, I realized I had not defined a clear hierarchy within the prefab or attached the necessary scripts to each joint. I created a training 'gym' and started training, only to find my agents falling straight to the ground, as they did not know how to walk.

Around the 80,000th step, the agent sometimes figured out how to roll over, and I think whether it does so depends on how it falls after spawning.

After a couple more training sessions, the agent had developed a crawling-on-its-back form of locomotion to try to reach the target (I should have decreased the max_steps value for faster iterations).
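Shortening a run for faster iteration mostly comes down to overriding max_steps in the trainer config. The sketch below assumes the general layout of an ML Agents trainer .yaml (a top-level `behaviors` map keyed by behavior name, each entry holding `trainer_type`, `hyperparameters`, `max_steps` and `summary_freq`); the behavior name "Walker" and every number in it are illustrative assumptions, not the values used in this project. It works on a plain Python dict so it runs without extra packages; a real .yaml file would first need to be parsed, for example with PyYAML's `yaml.safe_load`.

```python
import copy

# Hypothetical, trimmed trainer config mirroring the layout of an
# ML Agents example .yaml: behaviors -> <BehaviorName> -> settings.
# The behavior name "Walker" and all numbers are illustrative assumptions.
BASE_CONFIG = {
    "behaviors": {
        "Walker": {
            "trainer_type": "ppo",
            "hyperparameters": {
                "batch_size": 2048,
                "buffer_size": 20480,
                "learning_rate": 3.0e-4,
            },
            "max_steps": 30_000_000,
            "summary_freq": 30_000,
        }
    }
}


def quick_iteration_config(config, behavior, max_steps, summary_freq=None):
    """Return a copy of `config` with a shorter run for one behavior.

    The original dict is left untouched, so the full-length config can
    still be used for a final training run.
    """
    new_config = copy.deepcopy(config)
    settings = new_config["behaviors"][behavior]
    settings["max_steps"] = max_steps
    if summary_freq is not None:
        # Report more often so short runs still produce useful curves.
        settings["summary_freq"] = summary_freq
    return new_config


if __name__ == "__main__":
    short = quick_iteration_config(
        BASE_CONFIG, "Walker", max_steps=500_000, summary_freq=10_000
    )
    print(short["behaviors"]["Walker"]["max_steps"])        # 500000
    print(BASE_CONFIG["behaviors"]["Walker"]["max_steps"])  # 30000000
```

The shortened dict could be written out to a separate .yaml and passed to `mlagents-learn <config>.yaml --run-id=<name>`, keeping the full-length config intact for the final run.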

Here are some videos from the training.

Accessing the API:

I added a script that automatically takes a screenshot of the agent during gameplay. The idea was to post the screenshots directly to an Instagram account, but I was prompted to change my account password every time the API tried to access it.

Other Elements:

Audio-Reactive: Inspired by the idea of building a more immersive world for the agent to live in, I added a mic-reactive music system. I wrote a simple Unity script that uses microphone input to control the environment's background music in real time. It continuously samples the mic input, calculates a rough loudness level, and then adjusts both the low-pass filter and the music volume based on how loud the world outside the computer is.
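The loudness-to-audio mapping described above can be sketched in a few lines. This is a Python illustration of the logic, not the original Unity script: it assumes mic samples arrive as floats in [-1.0, 1.0], uses root-mean-square (RMS) as the "rough loudness level", and assumes that louder surroundings open up the low-pass filter and raise the volume. The class name `MicReactiveMixer`, the sensitivity, smoothing factor, cutoff range and volume range are all hypothetical. In Unity, the resulting values would plausibly be assigned to an `AudioLowPassFilter`'s `cutoffFrequency` and an `AudioSource`'s `volume`.

```python
import math


def rms(samples):
    """Root-mean-square loudness of a buffer of samples in [-1.0, 1.0]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def lerp(a, b, t):
    """Linear interpolation from a to b for t in [0, 1]."""
    return a + (b - a) * t


class MicReactiveMixer:
    """Maps microphone loudness to a low-pass cutoff and a music volume.

    Assumed mapping (not taken from the original script): louder rooms open
    the filter and raise the volume; quiet rooms muffle and lower the music.
    """

    def __init__(self, sensitivity=10.0, smoothing=0.1,
                 min_cutoff=500.0, max_cutoff=22000.0,
                 min_volume=0.2, max_volume=1.0):
        self.sensitivity = sensitivity  # scales raw RMS into a 0..1 level
        self.smoothing = smoothing      # 0..1, higher reacts faster
        self.min_cutoff = min_cutoff    # Hz when the room is silent
        self.max_cutoff = max_cutoff    # Hz when the room is loud
        self.min_volume = min_volume
        self.max_volume = max_volume
        self.level = 0.0                # smoothed loudness, 0..1

    def update(self, samples):
        """Feed one buffer of mic samples; return (cutoff_hz, volume)."""
        target = min(1.0, rms(samples) * self.sensitivity)
        # Exponential smoothing so the music does not jitter frame to frame.
        self.level += (target - self.level) * self.smoothing
        cutoff = lerp(self.min_cutoff, self.max_cutoff, self.level)
        volume = lerp(self.min_volume, self.max_volume, self.level)
        return cutoff, volume
```

Calling `update()` once per frame with the latest mic buffer yields a smoothed `(cutoff_hz, volume)` pair; the smoothing trades a little responsiveness for music that does not flicker with every clap or keystroke.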

The skybox updates every time a target is reached, and a ping sound is triggered as well.
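The target-reached feedback is essentially an event handler that advances a skybox and fires a sound. Here is a minimal Python sketch, assuming the skyboxes cycle in a fixed order and wrap around (the post does not say how the next skybox is chosen); the class `TargetFeedback`, the skybox names and the `play_ping` callback are hypothetical placeholders. In Unity, the corresponding calls would plausibly be assigning `RenderSettings.skybox` and calling `AudioSource.PlayOneShot` with the ping clip.

```python
from typing import Callable, List


class TargetFeedback:
    """Cycles through skyboxes and plays a ping each time a target is reached.

    Skybox names and the play_ping callback are hypothetical stand-ins for
    the Unity skybox materials and audio clip used in the project.
    """

    def __init__(self, skyboxes: List[str], play_ping: Callable[[], None]):
        if not skyboxes:
            raise ValueError("need at least one skybox")
        self.skyboxes = skyboxes
        self.play_ping = play_ping
        self.index = 0

    @property
    def current_skybox(self) -> str:
        return self.skyboxes[self.index]

    def on_target_reached(self) -> str:
        # Advance to the next skybox, wrapping around at the end of the list.
        self.index = (self.index + 1) % len(self.skyboxes)
        self.play_ping()
        return self.current_skybox
```

Keeping the skybox switch and the sound in one handler means both cues always fire together, which makes it easy to tell from a distance when the agent has just reached a target.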

IMA Show:
